The “visual intelligence” aspect of Apple Intelligence leverages the artificial intelligence capabilities of your iPhone to make what you see through the iPhone’s camera or on its screen interactive and actionable in ways that weren’t previously possible. It’s one of the most useful aspects of Apple Intelligence.

Triggering Visual Intelligence

We offer numerous examples of visual intelligence’s superpowers later in this article, but first, let’s make sure you know how to activate its two modes: camera mode and screenshot mode. Use camera mode to learn more about the world around you; use screenshot mode for help with something on your iPhone’s screen. Here’s how to trigger each mode:

- Camera mode: Press and hold the Camera Control button on all iPhone 16 models (except the iPhone 16e), all iPhone 17 models, and the iPhone Air. Press the Camera Control again or tap the shutter button to lock the image for visual intelligence. On the iPhone 15 Pro, iPhone 15 Pro Max, and iPhone 16e, which support Apple Intelligence but lack the Camera Control, use the Action button, a Lock Screen button, or a Control Center button.

- Screenshot mode: Simultaneously press the side button and volume up button to display an interactive preview of what was on the screen.

Visual intelligence analyzes the content of your image and provides relevant action buttons based on what it detects. While the Ask and Search options are always available, other buttons appear contextually depending on the content.

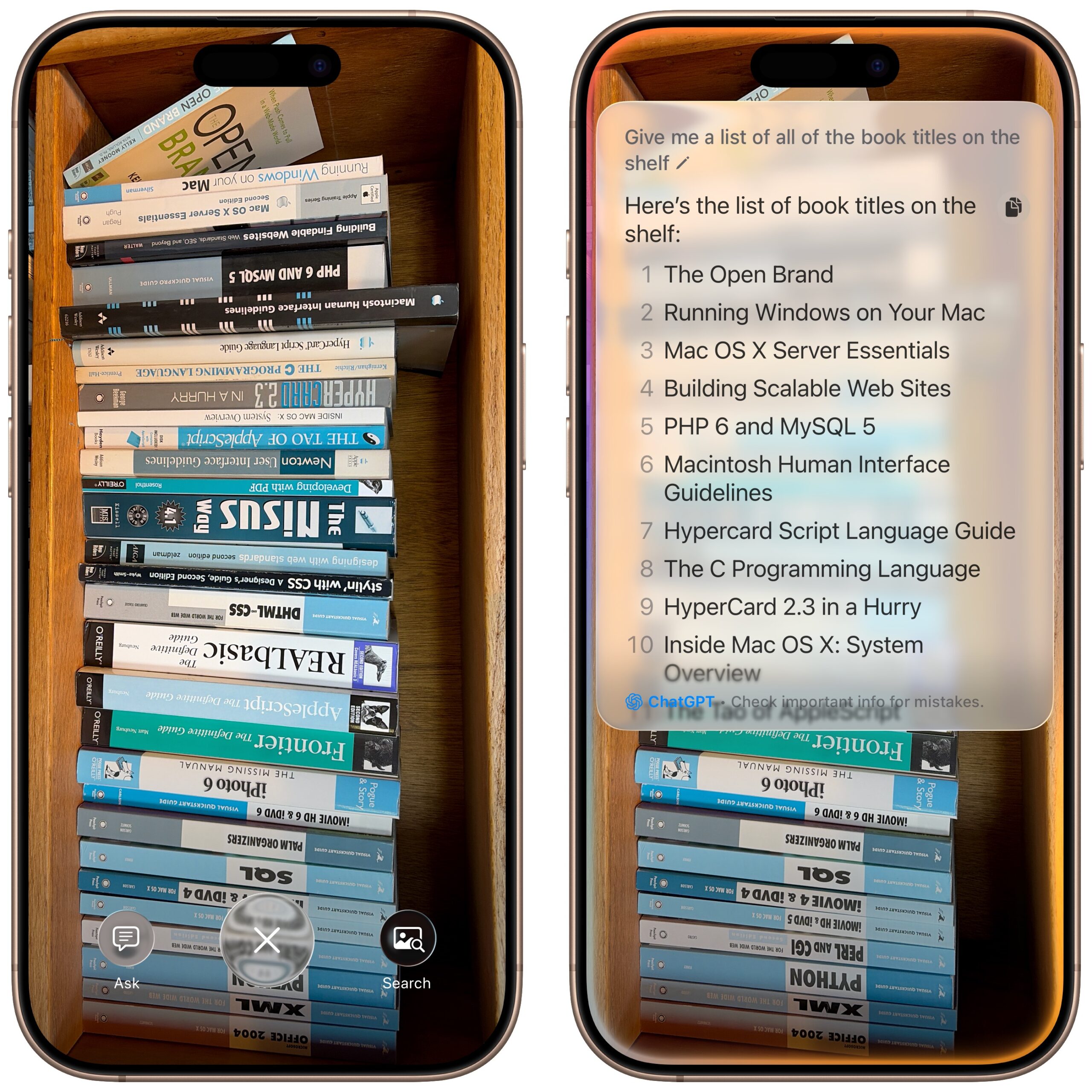

- Ask: Tapping the Ask button lets you pose a question about the image to ChatGPT. (But we’ve found that Apple won’t pass along health-related questions or queries with certain types of sensitive data.)

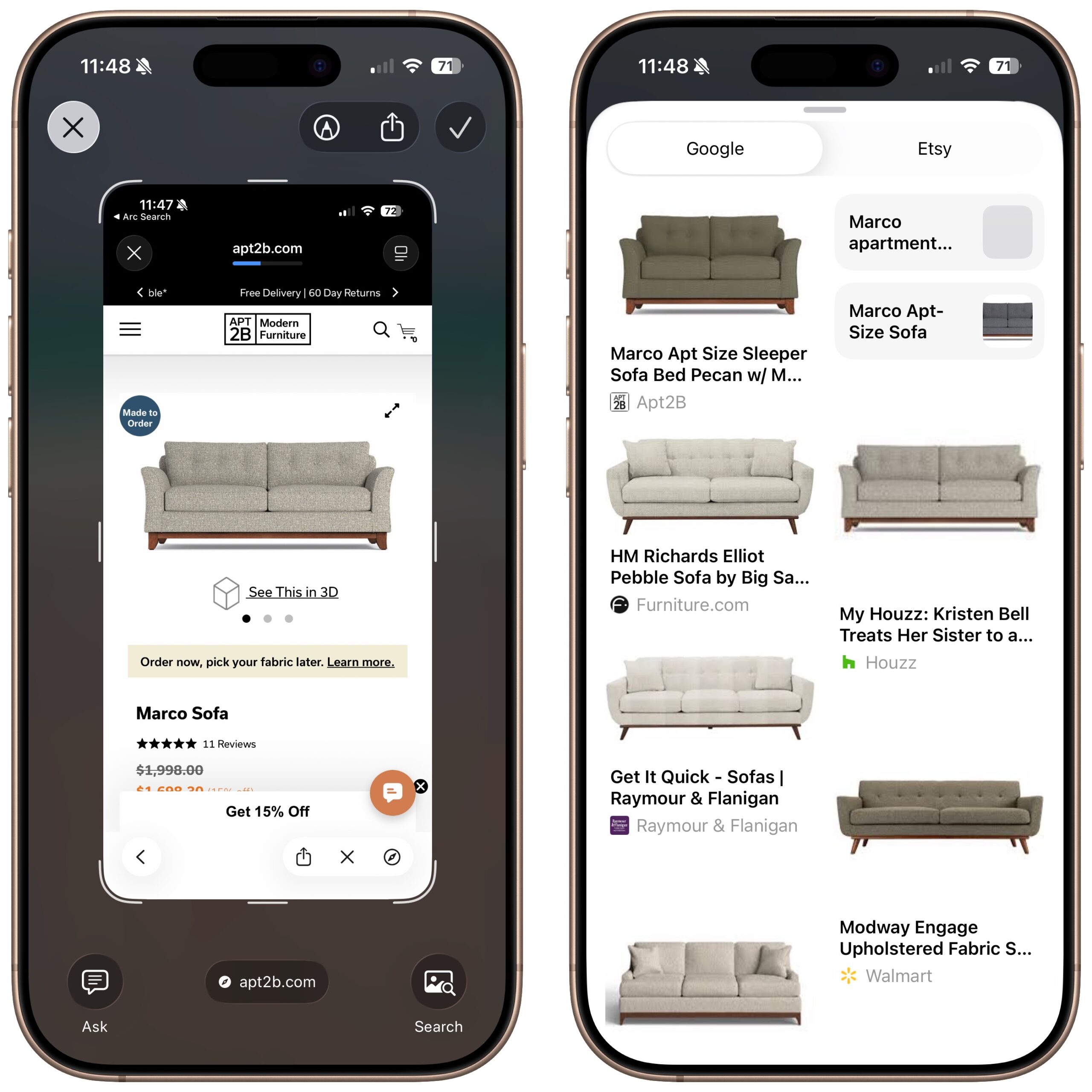

- Search: Tapping the Search button conducts a Google Image search for similar items. It may also display tabs for search results from other apps, such as Etsy or eBay.

- Recognized objects: When visual intelligence identifies an object, such as a specific plant or animal, it displays a button that brings up more details.

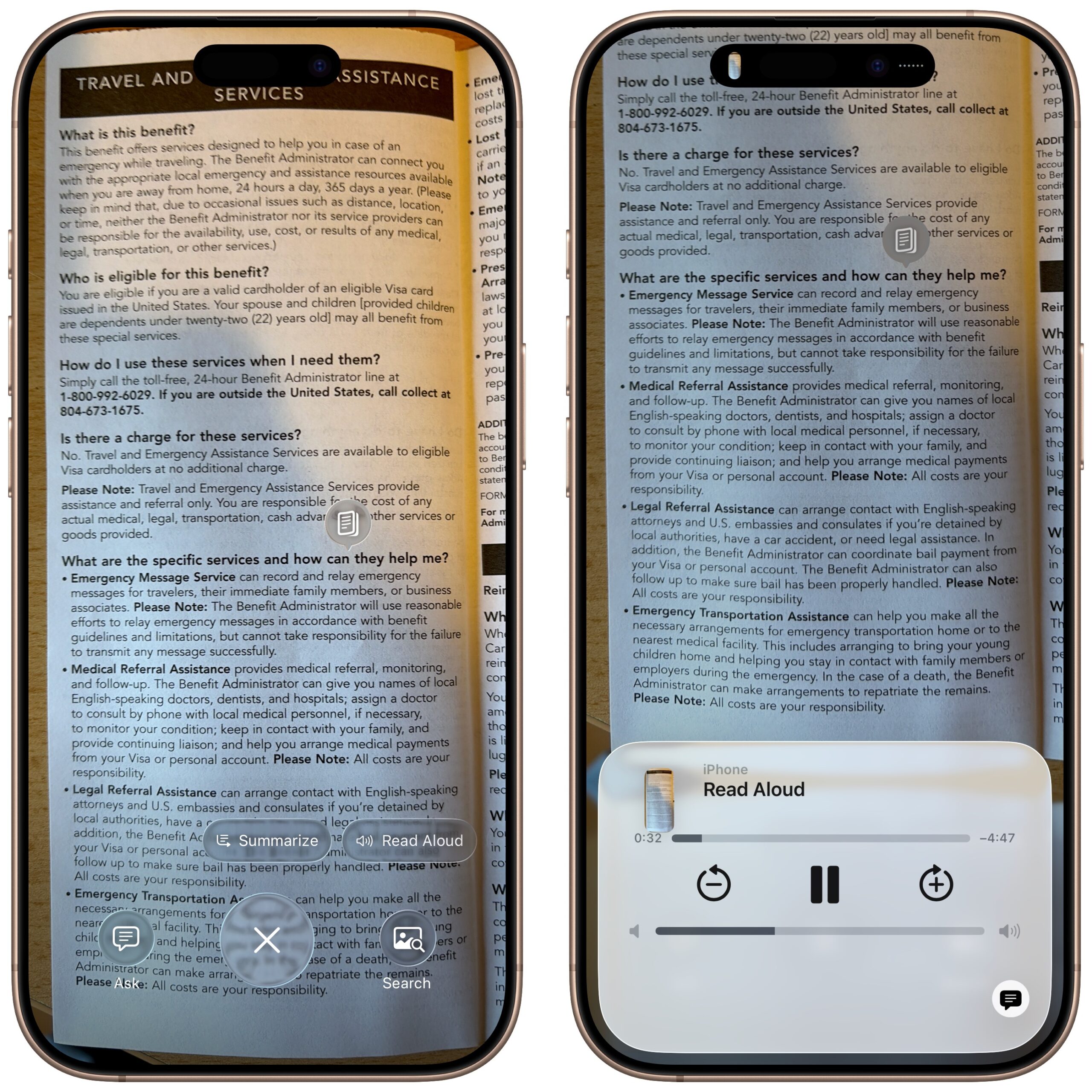

- Text: When it detects blocks of text, visual intelligence provides buttons to summarize the text, read it aloud, or translate it.

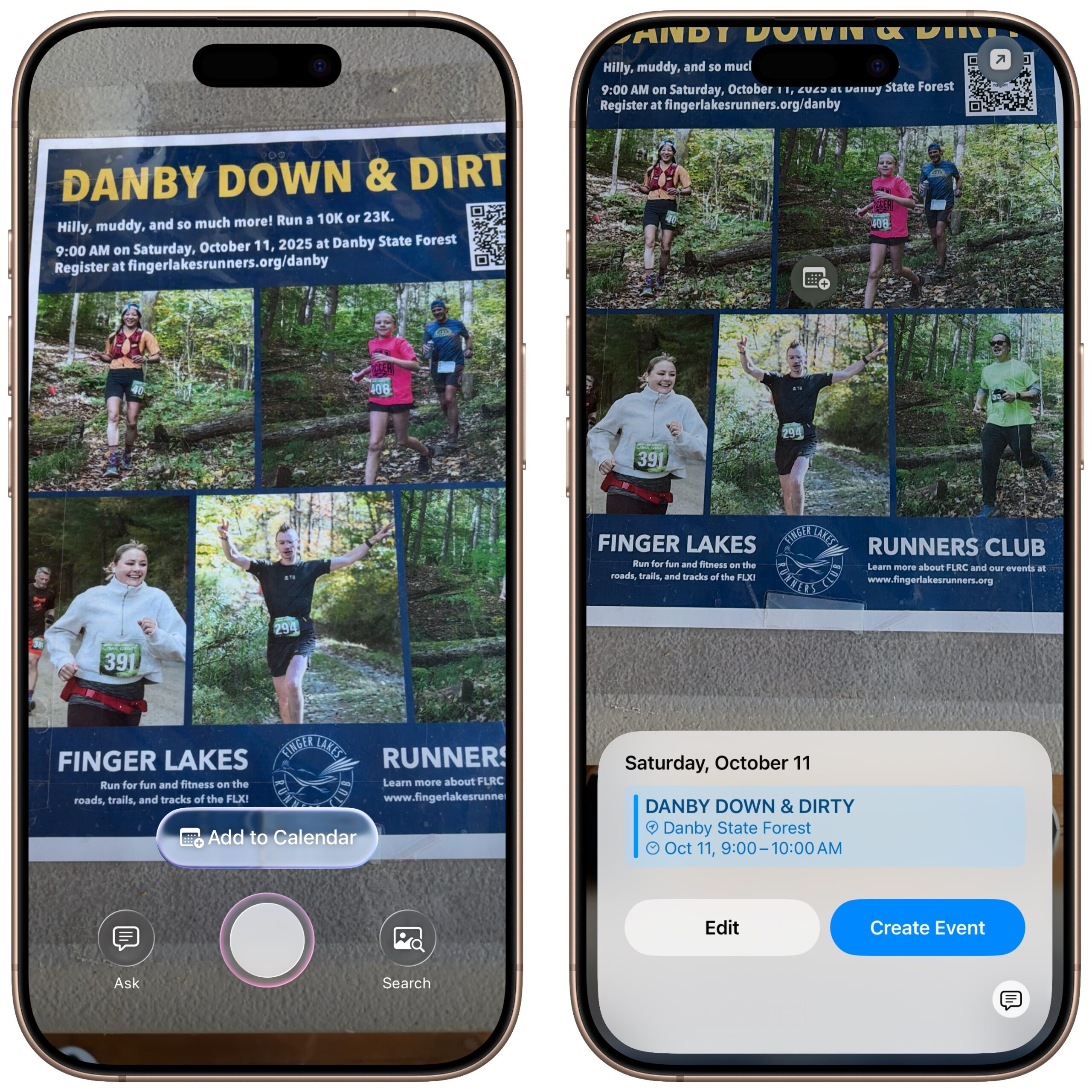

- Dates: If it detects a date in text, visual intelligence displays an Add to Calendar button.

- Contact info: When details like email addresses or phone numbers appear in the image, visual intelligence can help you call or message the number, or send an email.

- Addresses: When it identifies a physical address in text, visual intelligence displays a button that opens the address in Maps.

- URLs: Web addresses work the same way. A URL embedded in an image prompts visual intelligence to display a button that opens the website in Safari.

- Businesses and locations: When you capture an image of a business or other location that’s known in Maps, visual intelligence can show hours, menus, reviews, and more.

Real-World Uses for Visual Intelligence

It can be challenging to think of uses for visual intelligence at first, simply because it’s a new way of engaging with the world around you and what you see on your iPhone. We’re used to taking pictures of flyers for events we want to attend, doing Google searches for things we see, asking questions of chatbots, and using specialized apps to identify plants and animals. Visual intelligence can do all that and more. Here are a few practical ways to use it today:

- Create calendar events: Turn posters, flyers, invitations, or Web pages into calendar events. When you point the camera at a poster or take a screenshot of an event page, an Add to Calendar button allows you to create an event directly from the on-screen details.

- Find business information: Point the camera at a business to retrieve details such as hours, menu/services, phone number, and website. It’s the same information you’ll find in Maps, but it’s easier to pull up using visual intelligence.

- Search for products: Shopping for something? Once you find an example of what you like, such as a mid-century modern sofa, take a screenshot, circle the picture with your finger, and browse the search results for similar items.

- Summarize and read text aloud: When you’re faced with a large amount of text, especially if the font size is difficult to read, visual intelligence can provide a summary or even read it aloud. The option to have text read aloud can be particularly helpful for those with low vision.

- Translate text: iOS offers multiple ways to translate text in unfamiliar languages, including the Translate app, but visual intelligence is often the fastest way to get a quick translation of a sign or placard.

- Identify objects: We’ve all wondered what some plant or animal is. With visual intelligence, you can point your camera at it to find out quickly. Just tap the name that appears to get more details.

- Research questions: Sometimes, you know what you’re looking at but have questions about it. Instead of starting a new search, you can use visual intelligence, tap the Ask button, and pose your question to ChatGPT. Tap the response to ask a follow-up question. If you connect Apple Intelligence to your ChatGPT account in Settings > Apple Intelligence & Siri > ChatGPT, your conversations will be saved in ChatGPT, where you can review and continue the discussion.

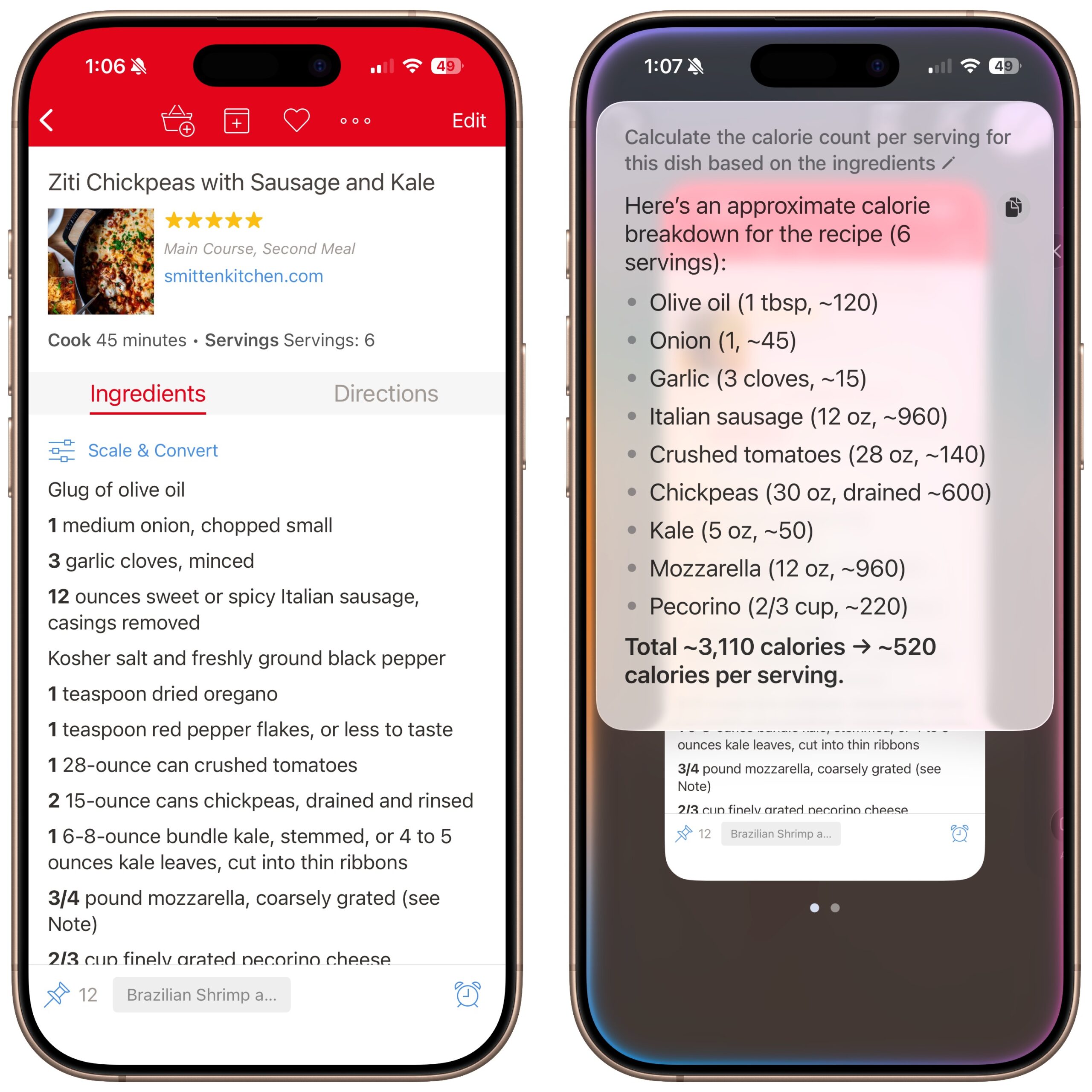

- Manipulate real-world data: Anything that can be photographed or captured in a screenshot can be used as data for other manipulations. For example, you could take a picture of a bookshelf and ask for a list of all the titles, or take a screenshot of a recipe and request the calorie count per serving.

How does visual intelligence compare to apps like ChatGPT, Claude, Gemini, and others? It outdoes them in two ways but falls short in one. Thanks to its deep integration with iOS and the iPhone’s Camera Control, visual intelligence is easier to activate than any other app. It also transfers data more effectively to other apps, for example, by sending URLs to Safari, phone numbers to Phone or Messages, and addresses to Maps. However, chatbot apps, which can also analyze photos and screenshots, are more capable in conversation: they offer more detailed information and are willing to discuss potentially sensitive topics that Apple won’t touch, such as health and politics. We use visual intelligence for straightforward tasks, but for more complex situations, we often turn to a chatbot app instead.

(Featured image by Apple)